Why did we change our architecture?

Many contributors have struggled to integrate their work into our codebase and deployment environments. In 2020, we ran four honours projects at the University of Cape Town and only one student was able to integrate their work into our codebase. This was only possible after numerous onboarding sessions and lots of research on their part. After these projects concluded, we saw the shortcomings in our current infrastructure and development life cycle. iNethi had too many moving parts that required too much manual configuration and low-level networking knowledge. So we decided to change it up!

How did we change?

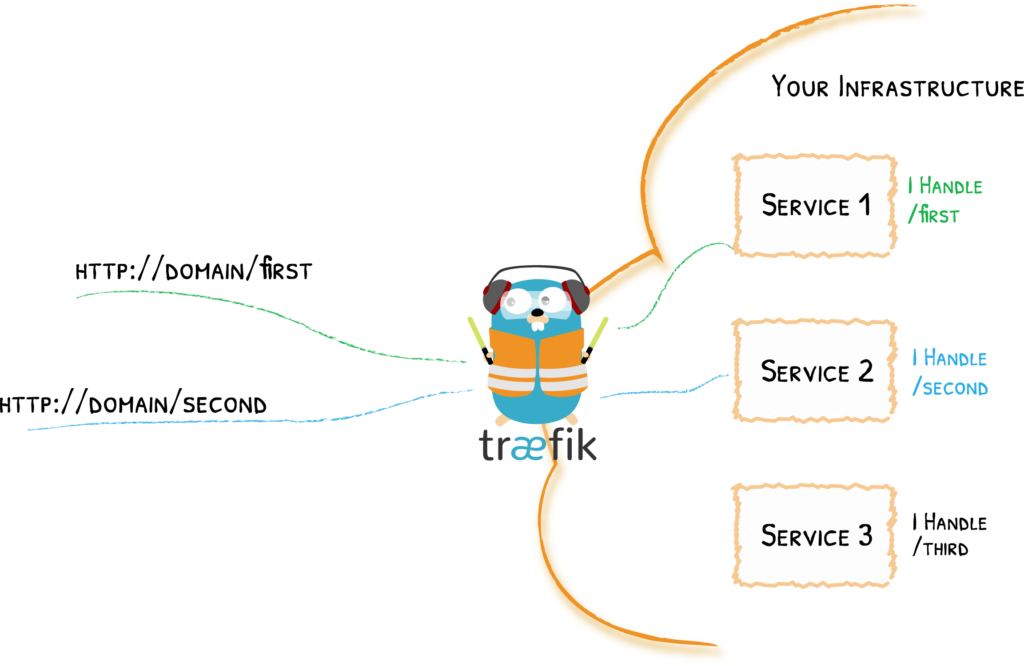

Well, pretty much everything had to change… As complicated as that may sound, it was relatively straightforward. We decided to do away with HAProxy and introduce dnsmasq and Traefik to handle our domain name resolution and reverse-proxy needs. This meant that we added two containers, removed one, and then updated all our services to use the new system. The really exciting part is that Traefik and dnsmasq made integration so easy that updating a service was, in most cases, as simple as adding a few constants and a custom URL to its compose file.

The nitty-gritty

When Docker is installed, it creates two custom iptables chains named ‘DOCKER-USER’ and ‘DOCKER’. It also ensures that incoming packets are always checked by these two chains first. iptables is a pre-installed firewall system that interfaces with the kernel’s Netfilter packet filtering framework. It interacts with traffic through packet filtering hooks in the Linux kernel’s networking stack. Whether incoming or outgoing, every packet triggers these hooks, which allows the programs registered with them to interact with the packet at key points in the networking process. In essence, this means our dnsmasq Docker container can intercept requests and override the host server’s operating system DNS settings.

So this is what happens when a user requests a service: requests on port 53 are sent to the internal IP address of dnsmasq, which acts as a DNS server and confirms that the request has reached the server. Any requests on port 80 or 8080 are forwarded to Traefik, which uses a wildcard configuration to ensure that any URL ending in ‘inethi.net’ is forwarded to the appropriate Docker container’s internal IP address, where the request is processed. For example, when a service is first requested, the incoming packets activate the hook for port 53 in the Docker chain. These packets are sent to dnsmasq, which in turn sends a confirmation response packet to the user. The subsequent packets then activate the port 80 or 8080 hooks in the Docker chain and are sent to the internal IP address of Traefik, which passes them on to the requested service’s Docker container.
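
To make the flow above a little more concrete, here is a toy Python model of the two decisions involved: which container first receives a packet on a given port, and where Traefik sends a request based on its hostname. This is only a sketch of the logic, not how iptables or Traefik are implemented. The IP addresses, the service names, and the function names are all made up for illustration.

```python
from typing import Optional

# Hypothetical internal addresses; the real ones depend on the Docker network.
DNSMASQ_IP = "172.18.0.2"
TRAEFIK_IP = "172.18.0.3"

# Hypothetical routing table: hostname -> internal IP of the service container.
SERVICES = {
    "service-a.inethi.net": "172.18.0.10",
    "service-b.inethi.net": "172.18.0.11",
}


def first_hop(port: int) -> Optional[str]:
    """Return the container that first receives a packet on this port."""
    if port == 53:
        return DNSMASQ_IP  # DNS queries go to dnsmasq
    if port in (80, 8080):
        return TRAEFIK_IP  # web traffic goes to Traefik
    return None  # other ports are outside this sketch


def traefik_route(host: str) -> Optional[str]:
    """Mimic the wildcard rule: only hosts under inethi.net are routed."""
    if host != "inethi.net" and not host.endswith(".inethi.net"):
        return None
    return SERVICES.get(host)


if __name__ == "__main__":
    # A first request: the DNS lookup arrives first, then the web request.
    print(first_hop(53))
    print(first_hop(80))
    print(traefik_route("service-a.inethi.net"))
```

The point of the sketch is the split of responsibilities: the port decides whether dnsmasq or Traefik sees the packet, and the hostname decides which service container Traefik hands it to.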

So what?

All this may sound a bit abstract, but the improvements can be summed up as follows: developers now only need to add Traefik labels to their Docker Compose file in order to integrate a service with the new iNethi environment. It seems like magic, but that's just the power of Traefik!
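
To give a rough idea of what those labels look like, the Python sketch below generates a set of labels for one service and prints them in the shape they would take under a service's `labels:` key in a compose file. It assumes Traefik v2 label syntax (this post does not say which version iNethi uses). The service name `myservice`, the port `8000`, the entrypoint name `web`, and the helper `traefik_labels` are all hypothetical.

```python
from typing import List


def traefik_labels(service: str, port: int, domain: str = "inethi.net") -> List[str]:
    """Build Traefik v2-style labels for one Compose service (illustrative only)."""
    return [
        # Tell Traefik to expose this container.
        "traefik.enable=true",
        # Route requests for <service>.<domain> to this container.
        f"traefik.http.routers.{service}.rule=Host(`{service}.{domain}`)",
        # "web" is an assumed entrypoint name; it must match Traefik's own config.
        f"traefik.http.routers.{service}.entrypoints=web",
        # Port the service listens on inside its container.
        f"traefik.http.services.{service}.loadbalancer.server.port={port}",
    ]


if __name__ == "__main__":
    print("labels:")
    for label in traefik_labels("myservice", 8000):
        print(f'  - "{label}"')
```

With labels like these in place, Traefik picks up the service from Docker by itself, which is why no manual proxy configuration is needed.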